On Oct. 2, the University of New Mexico hosted a webinar Q&A with filmmaker Shalini Kantayya, the creator of “Coded Bias,” a 2020 documentary about racial bias in artificial intelligence.

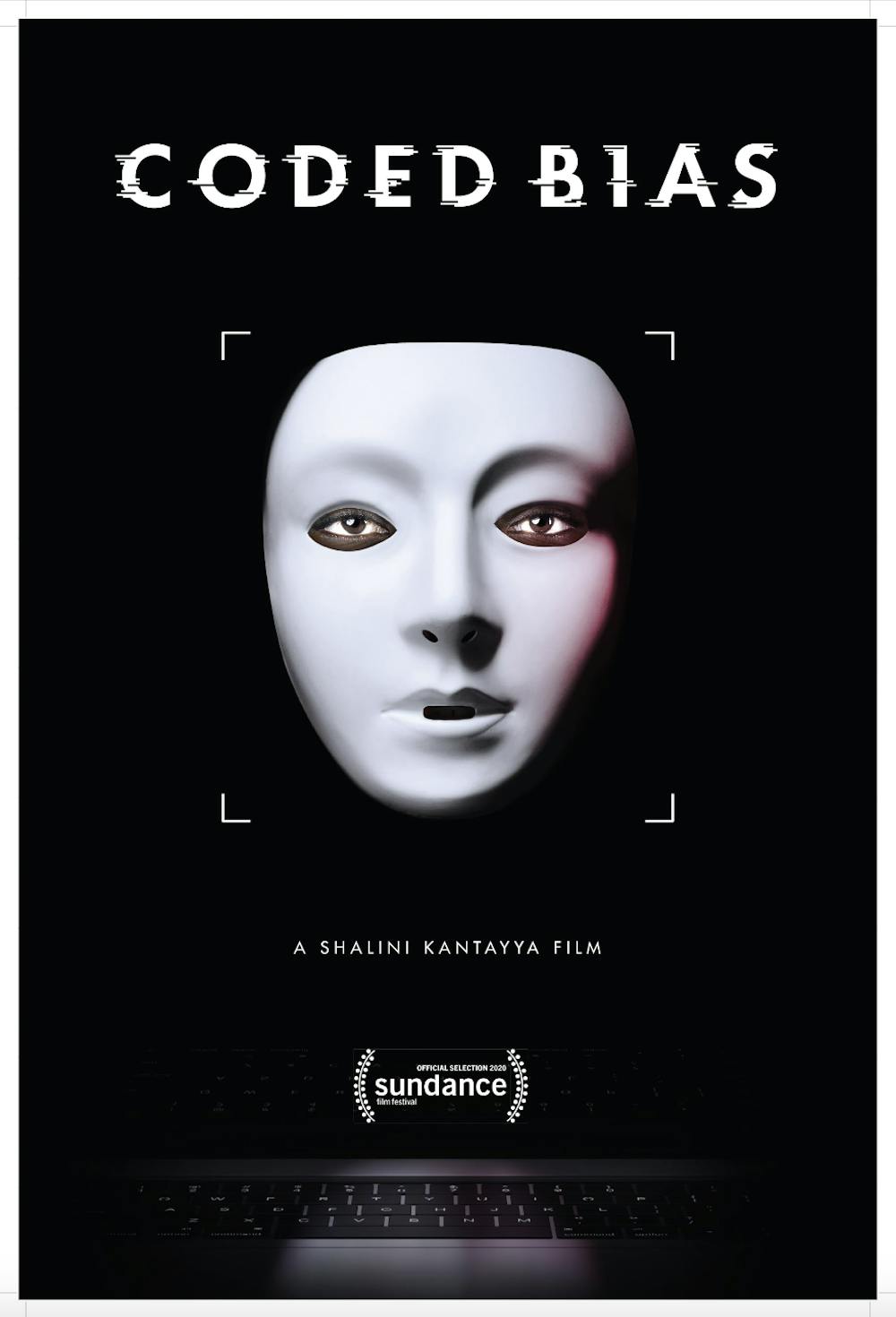

“Coded Bias” opens with an attempt at self-empowerment by its lead, MIT researcher Joy Buolamwini. She intended to make a mirror that would superimpose inspiring images, such as a lion’s head to channel strength, over her face. In trying to build the mirror, she found that facial recognition technology did not detect her face. It would, however, recognize an uncanny white mask as a face.

Buolamwini realized that the faces used to train facial recognition technology were predominantly those of white men. The machine had never been taught that the faces of Black women like her were faces too, so it struggled to recognize her.

Kantayya said she first “fell down the rabbit hole” when she read the book “Weapons of Math Destruction” by Cathy O’Neil.

“I was surprised by the extent to which we as human beings are outsourcing our autonomy to machines in ways that really change lives and shape human destinies,” Kantayya said. “And that's when I really began to understand that AI is radically changing who gets hired, who gets health care, even how long a prison sentence someone may serve.”

The discovery led Kantayya to uncover the ways in which data and algorithms dictate our lives and the ways in which those algorithms are biased, though often unintentionally.

As the lead in the documentary, Joy Buolamwini, says, “Data is destiny.”

The film further explains that AI replicates the world as it is and as it has been. This means the biases of our history are present in, and exacerbated by, AI. As an example, the film tells the story of Amazon’s experiment with AI in hiring, in which the system learned to disfavor résumés from women.

Kantayya explained that AI regulation is currently very loose — in some cases, almost nonexistent. Its biggest problem is that it’s “not keeping pace with the technology,” she said.

Another primary concern is who owns the data, who owns the code and what data goes into the code.

“I don't think I could even find numbers on people of color AI researchers,” Kantayya said. “So that is abysmal — worse than being a woman doctor in the 1940s.”

Facial recognition and AI also pose a civil rights issue, the documentary notes. Often, people’s faces are added to databases without their consent. (And too often, facial recognition is bad at distinguishing faces of color, according to the documentary.)

The documentary specifically notes the problem of false positives, in which AI wrongly matches innocent people to “wanted” lists.

Concerns that were raised four years ago in the film are still relevant now, as tools like ChatGPT become more popular and lives are increasingly automated — even in artistic mediums such as film.

“The role of arts is sometimes to give us a reflection of the world that we're living in and to help us dream of new futures,” Kantayya said. “I will say that I'm very concerned about what's happening as AI becomes the cultural producer and the artist … What I worry about is that we often talk about these platforms as free speech, as our public squares. But they're not. They're multi-billion dollar tech companies.”

Kantayya is still working on films related to technology. In 2022, she created a documentary called “TikTok, Boom” and is currently working on a project about dating apps.

Addison Fulton is the culture editor for the Daily Lobo. She can be reached at culture@dailylobo.com or on X @dailylobo