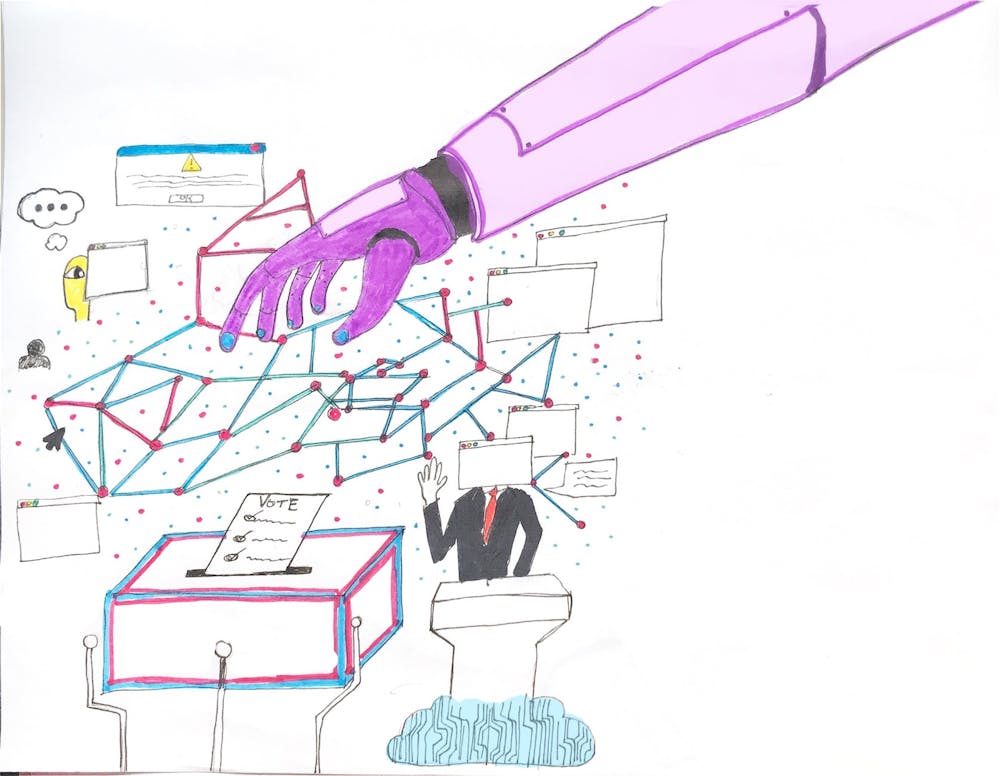

On Aug. 18, former President Donald Trump posted a series of images to Truth Social, one of which was real.

The rest were AI-generated images of Taylor Swift fans endorsing and supporting him. The post was captioned, “I accept!”

This is not the first instance of AI being used to distort information regarding the election. Also on Aug. 18, Trump's official X account posted an AI-generated image of a Kamala Harris rally decorated with communist symbols and regalia. The post has over 81 million views.

On Aug. 5, New Mexico Secretary of State Maggie Toulouse Oliver cosigned a letter to Elon Musk, urging him to fix election misinformation spread by Grok, the AI search assistant available to X Premium and Premium+ subscribers. Led by Minnesota Secretary of State Steve Simon, the letter was also signed by the secretaries of state of Michigan, Pennsylvania and Washington.

After President Joe Biden withdrew from the presidential race, Grok claimed that Harris had missed the ballot deadline in nine states, including the states that the letter’s signees represent, according to the letter.

“Grok continued to repeat this false information for more than a week until it was corrected on July 31,” the letter reads.

In May, a political consultant faced charges after an AI deepfake of Biden’s voice called New Hampshire Democrats and discouraged them from voting, according to NPR.

Alexzander Bowen, a psychology student at the University of New Mexico, expressed his frustration with how rampant AI use has become on the internet in the past few years.

“It’s tiring how people use AI imagery to fearmonger, spread misinformation or further an agenda,” Bowen said.

The misinformation that AI spreads across the internet has dangers that extend far beyond this upcoming election, Bowen said. AI algorithms “can easily radicalize someone just by bombarding them with specific posts that are related or fabricated,” he said.

Toulouse Oliver’s website has a guide teaching voters how to detect AI-generated photos, videos and audio. It lists signs that content may be AI-generated, including “irregularities in human features,” “skin that appears too smooth or too wrinkly” and “flat, dry tone.”

Jessica Feezell, an associate professor of political science at UNM who focuses on the influence of media, said AI has the potential to impact voter behavior this election.

“I think that misinformation and disinformation is nothing new to politics,” Feezell said. “The difference in the last few election cycles is the ability and ease with which people can share information of poor quality, or intentionally misleading, information to large audiences through different social media platforms.”

Feezell said it is not the technology itself that worries her, but rather human misuse of it.

“What concerns me is people using AI for nefarious purposes,” Feezell said. “What happens still comes down to what humans chose to do with it. The intention of humans is what makes me most worried — but also most optimistic, because this is something we can educate against and work to improve.”

Feezell stressed the importance of education, fact-checking and relying on reliable, traditional sources for information about the election.

David Gonzalez, a philosophy student at UNM, said he feels that AI must be regulated in order to ensure free and fair elections. He said he worries that AI-generated content targets older voters who know less about generative AI.

“AI is a genie out of the bottle; it is here to stay and ruin older people’s decision-making. Unregulated AI is a threat to our elections and our political system as a whole,” Gonzalez said. “AI should be a tool, not a weapon. We designed it, we developed it, it is our duty to ensure that it cannot be abused.”

Addison Fulton is the culture editor for the Daily Lobo. She can be reached at culture@dailylobo.com or on X @dailylobo.

Elijah Ritch is a freelance reporter for the Daily Lobo. They can be reached at culture@dailylobo.com or on X @dailylobo.